GPT-4 And The Future

Future Telescope 6

This flaque track is great to play in the background as you read today’s edition:

You may remember my attempts at coding a “Mahabharata GPT”. While those attempts didn’t succeed, it seems that someone else has managed to build a ChatGPT-like chatbot based on the Bhagavad Gita!

Enter GitaGPT.

Religious applications like GitaGPT (or a Bible GPT, or a Quran GPT) are just one way of using this technology. With today’s launch of GPT-4, we saw a whole host of other practical applications of GPT technology in the real world. These applications and their implications are what we will talk about in today’s edition.

1. GPT-4 shows up.

Even before the release of ChatGPT or DALL-E 2, speculation about GPT-4 was rife. Everything was up for discussion, from its size to its capabilities.

Now that GPT-4 has been released, there is plenty of information about it on OpenAI’s website and in this brilliant technical report. By the way, given that GPT-4 was trained on data up to September 2021, how prescient was this tweet from June 2021?

GPT-4 is super capable in many ways, but the key things that jump out to me are:

It can emulate writing styles. Just give it a sample of someone's style and watch it go. It can even write like you!

It can read a lot: about 25,000 words at a time. You can feed it a novella and it will still ask for more!

It can see things too. It can take images as inputs and generate witty captions, labels, and critiques.

There’s more on GPT-4 out there than I can possibly cover. Here are a few links to help you get up to speed:


Ben’s newsletter and Twitter feed are goldmines of AI development examples.


2. IRL

Worth noting are the limits on what is available to users today. As of today, neither image input nor the ability to read 25,000 words at a time has been made available.

Also, accessing GPT-4 costs $20 per month, since you need a ChatGPT Plus subscription. So if you don’t have a specific use case for it yet, try Bing AI, which is now open to everyone. It uses GPT-4 under the hood, so you should be good.

Watch this video by Matt Wolfe to learn which GPT-4 capabilities you can use today (15/16 March 2023):

Also worth watching is this video from the YouTube channel “AI Explained”, which walks through the technical report released with GPT-4 and distills 14 takeaways:

The last couple of points highlight some of the emergent capabilities that such a powerful system can develop. GPT-4 can and does display “agent-like” behavior, i.e. an ability to act in ways that advance goals it can set for itself. The Alignment Research Center (ARC), which OpenAI brought in to test for such capabilities, gave it money and the ability to talk to people, and it seems that GPT-4 was capable of acting in duplicitous ways. Example:

3. Catalogue

When GPT-3 came out in 2020, it kicked a new kind of economy into high gear: one built on generative content. It gave rise to startups like Jasper and Copy.ai, which went on to command high valuations.

When DALL-E 2 came out in April 2022, it kicked its own kind of economy into high gear. It brought to life startups like Midjourney and changed the way tools like Canva and Photoshop were used.

When ChatGPT came out at the end of November 2022, well, the world went nuts.

With GPT-4 out in the wild now, there is going to be another wave of economic opportunities, this time in app and website development. People are already showing off capabilities like:

Coding games:

Coding iPhone apps:

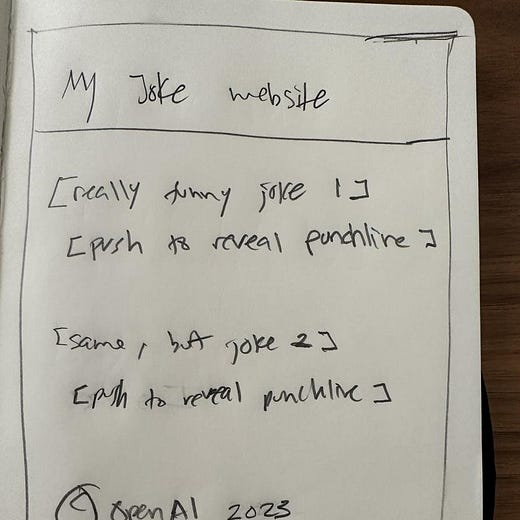

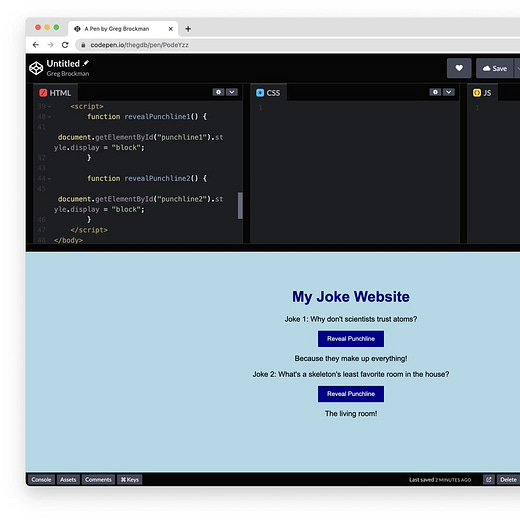

And of course, the viral Greg Brockman demo of building a website from a napkin sketch:

This is the world we are stepping into. A world full of possibilities. A world full of breathless innovation. Are you ready to step right into it?

That’s all for the sixth edition. See you next week.

This is a technology that requires immersion and hands-on use to get on top of. I am a novice, but I'm using the hell out of it. I've used the first iteration to summarize my own essays so that I can see whether the right points are coming out (GPT-4 is much better at this).